How Do We Know Whether Our Capacity Strengthening Efforts Are Working?

A practical framework for matching your monitoring, evaluation and learning approach to your capacity strengthening modality

Consider a common scenario: You have just completed a three-day training workshop on climate vulnerability assessments. Participant feedback is overwhelmingly positive – the facilitators were excellent, and the content was relevant. By conventional measures, the workshop was a success.

But six months later, can you demonstrate that participants are actually applying these methods in their work? Did the training translate into improved adaptation planning? Has the investment of time and resources delivered lasting value?

This is the fundamental challenge of monitoring, evaluation and learning (MEL) for capacity strengthening (CS) activities in climate adaptation research. While satisfaction surveys may be entirely appropriate for assessing a webinar, they tell us little about the effectiveness of a year-long mentorship programme. The critical question isn’t simply whether we conducted rigorous MEL, but whether we applied the right MEL approach for what we were trying to achieve.

Why One Size Doesn’t Fit All

In climate adaptation research and practice, CS goes far beyond transferring knowledge or hosting one-off trainings. It is about fostering lasting change – in how people think, collaborate and apply new ways of working on complex challenges. Understanding how this change happens, and under what conditions, requires an equally thoughtful process of monitoring, evaluation, and learning.

Before setting up a MEL system, it is essential to pause and ask a simple question: what do we want to learn? Clarity on this shapes everything that follows, from the focus of our MEL approach to the tools we choose.

As part of our work co-hosting the CS Hub within the CLARE programme, we support the Hub’s Responsive Fund, a mechanism designed for experimentation and cross-project learning around emerging capacity needs within the CLARE portfolio.

A small cohort of CLARE-funded projects has been supported to test and learn from innovative CS approaches in their work, from piloting mentoring models and peer exchanges to experimenting with creative learning formats that bring researchers and practitioners together.

One of our support functions is to enable Responsive Fund recipients to explore, test and experiment with MEL approaches that capture what they are learning from their CS activities.

Matching Your MEL Approach to Each CS Modality

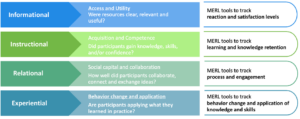

There are many ways to categorise and make sense of the wide range of CS and learning modalities. Within the CS Hub, we use four main categories to group CS modalities (see Figure 1).

Each modality group contributes to capacity strengthening in different ways and therefore requires a different MEL approach. Figure 2 illustrates how each modality group aligns with a particular MEL focus and outlines the key question guiding our evaluation.

Informational: Did we reach people effectively?

What it is: Activities that provide access to clear, relevant, and useful resources – knowledge repositories, webinars, newsletters, or guidance documents.

What success could look like: This modality is about effective dissemination and usability – people found what they needed, and it was valuable to them.

MEL approach: MEL tools track reaction and satisfaction levels. Are people accessing these resources? Do they find them helpful? Would they recommend them to colleagues?

Example tools:

- Post-webinar surveys measuring clarity and relevance

- Brief feedback forms asking ‘Was this useful? Will you use it?’

- Website analytics showing resource downloads and user engagement

Instructional: Are people actually learning?

What it is: Activities designed to build specific knowledge, skills and/or confidence – training programmes, workshops, online courses and technical demonstrations, such as GIS training or a course on climate risk and vulnerability assessments.

What success could look like: Participants walked away more capable than when they arrived. The key question shifts from ‘was this useful?’ to ‘did participants actually learn something?’

MEL approach: MEL tools track learning outcomes and knowledge retention.

Example tools:

- Pre/post knowledge tests and surveys

- Practical skills demonstrations or assignments

Relational: Are we building connections and communities of learners?

What it is: Activities that create social capital and peer learning networks – communities of practice, peer learning exchanges, mentoring programmes and collaborative platforms.

What success could look like: Meaningful connections form and knowledge flows between peers. Collaborative relationships outlast the formal programme. This recognises that CS happens through relationships, not just through individual learning experiences.

MEL approach: MEL tools track process and engagement through qualitative and participatory measures that capture network effects.

Example tools:

- Network mapping showing relationship formation over time

- Periodic reflection sessions

- Documentation of peer exchanges, problem-solving collaborations or resource sharing

Experiential: Is learning translating into action?

What it is: Activities designed to bridge the knowing-doing gap – action learning projects, embedded advisors, field placements, and coaching programmes.

What success could look like: Participants are actually applying what they’ve learned in their practice.

MEL approach: MEL tools track behaviour change and the application of knowledge and skills. This often requires longer-term follow-up, observation in practice settings, case studies and documentation of concrete changes in how people work.

Example tools:

- Most Significant Change stories

- Implementation journals or portfolios

- 6-month follow-up interviews asking ‘What have you applied? What worked? What didn’t?’

Selecting the ‘Right’ MEL Tool

Once you know what you want to learn, how do you select the right tool? There are many factors to consider during your selection process, and many frameworks available for guidance.

Our framework is based on a set of guiding questions designed to ensure MEL tool choices are purposeful, credible, inclusive, and feasible (see Figure 3). These questions are adapted from INTRAC’s Toolkit for Small NGOs (Garbutt, n.d.) and have been tailored to the context of the CS Hub’s Responsive Fund projects.

Where Can You Find the Tools You Need?

If you already know what you want to learn and how to select the right tool for your needs, the next question is: where can you find it?

For comprehensive coverage across all four focus areas:

- INTRAC: Tools for M&E of Capacity Strengthening (M&E Universe) – an extensive collection covering reaction, learning, engagement and application

- BetterEvaluation (especially the Rainbow Framework) – wide variety of approaches, though lighter on process and engagement tools

For tracking behaviour change and application (Experiential focus):

- Western Student Experience (Experiential Learning) – tools focused mainly on tracking behaviour change and the application of knowledge and skills

For participatory approaches to satisfaction and engagement (Informational and Relational focus):

- Participatory Methods – collection emphasising stakeholder voice in evaluation

For broader evaluation methodology:

- UNDP – Evaluation Methodology Center – comprehensive but may require adaptation for CS contexts

- EU Evaluation Learning Portal – strong on general evaluation, lighter on CS-specific tools

Moving Forward: Getting MEL Right for Your Context

By aligning your MEL strategy with the specific aims of your CS modality, you can set realistic expectations, ask the right questions, and learn from your efforts.

A webinar doesn’t need to lead to behaviour change to be successful – but it should demonstrate that it provided useful, accessible information. Conversely, an intensive mentorship programme should aim for more than participant satisfaction – it should show evidence of behaviour change.

Understanding which question to ask about the effectiveness of your CS activity helps you to evaluate more accurately and design better interventions in the future.

Do you want to explore these approaches further?

PlanAdapt continues to develop practical MEL guidance for capacity strengthening through the CLARE Capacity Strengthening Hub. We are keen to learn from others working on these challenges – if you’re experimenting with MEL for capacity strengthening, we’d love to connect and exchange experiences!